Centric Pricing & Inventory

Increase Profitability with AI-Powered Predictive Pricing and Inventory

Maximize margins by optimizing pricing to influence demand.

Accelerate growth by aligning customer demand with accurate supply.

Sell more with less inventory and reduce discounting.

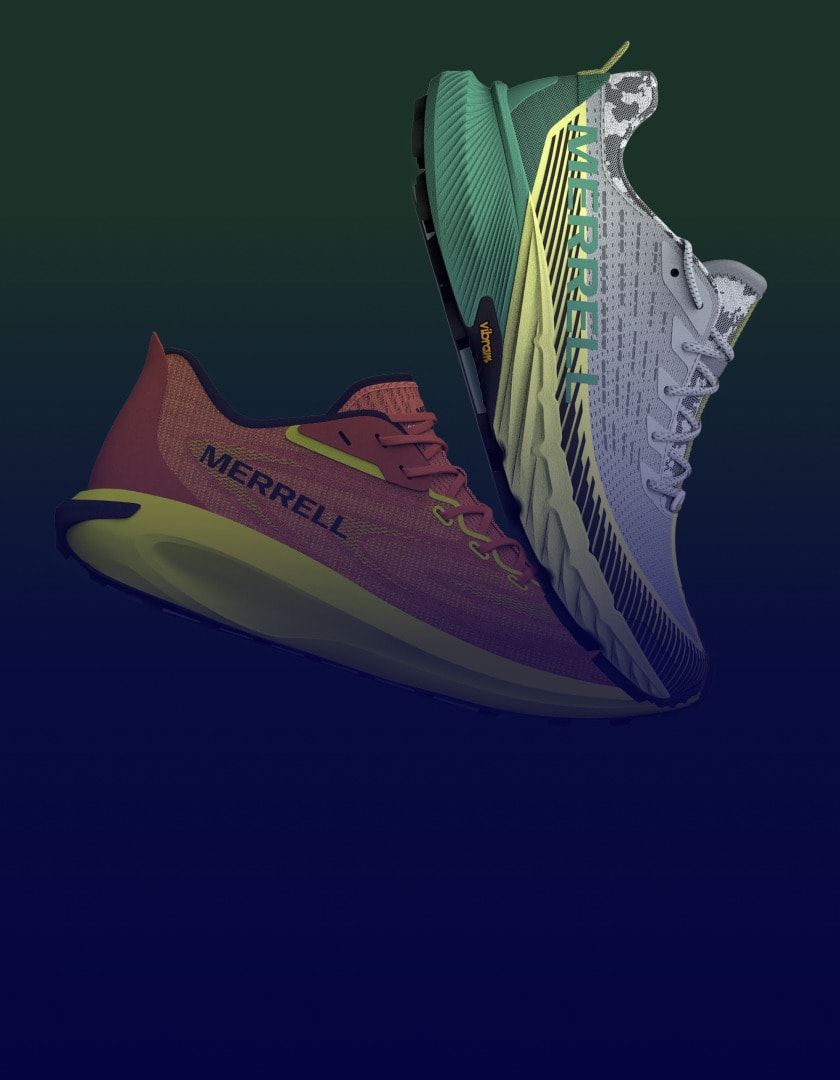

Designed for the world’s top fashion, apparel and home brands and retailers, including omnichannel, wholesale and e-commerce.

Make a concrete impact with fast time to value for fashion, apparel and footwear brands and retailers

6-18% growth in sales

4-15% improved gross margin

5-30% reduced working capital

50% decrease in personnel costs

Optimize Pricing and Inventory for Maximized Revenue

Accelerate In-Season Decision Making with Centric.

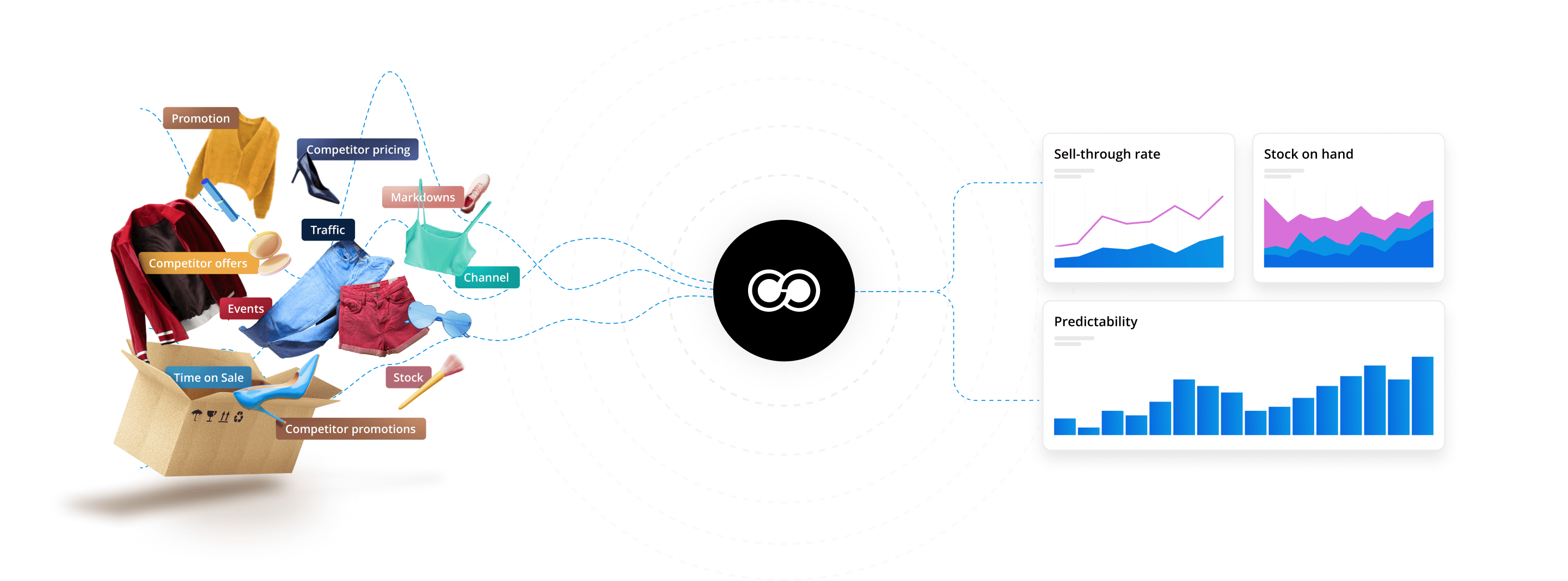

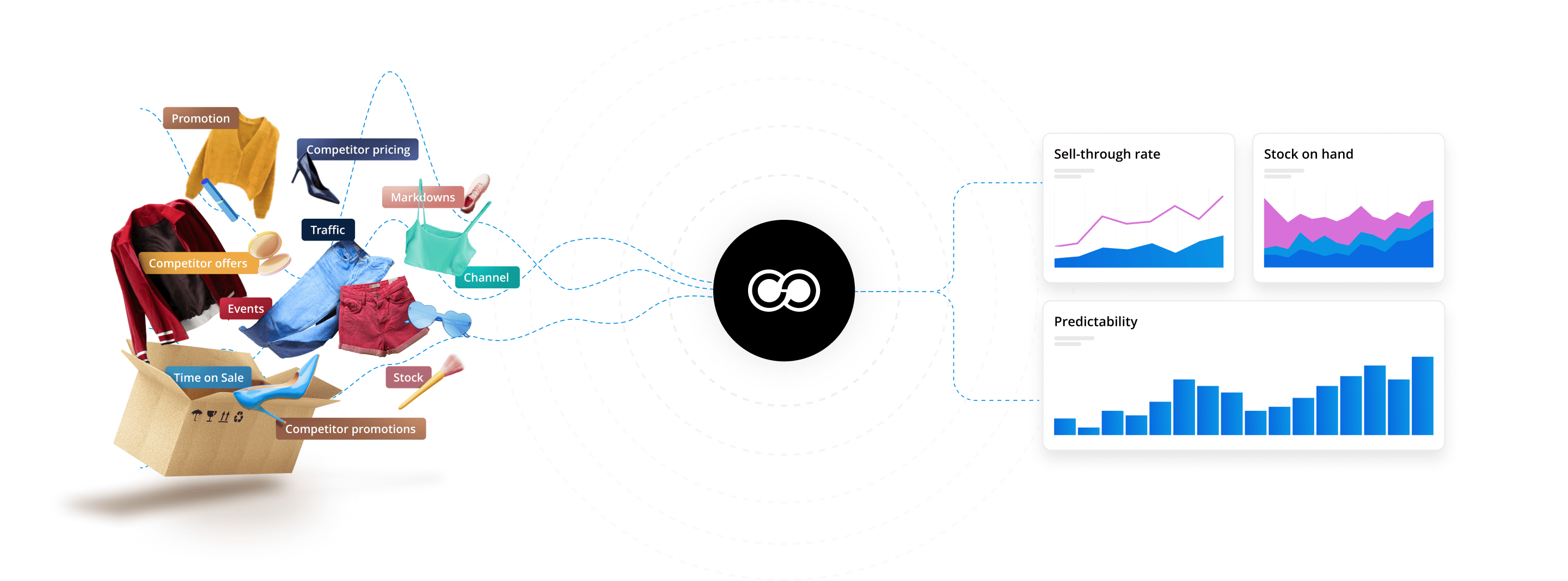

With margin pressure on every single SKU, brands and retailers can no longer make pricing and inventory decisions based on the past.

Manually finding and maintaining optimal pricing, promotions and inventory throughout a product’s lifecycle for thousands of products is time-consuming and error-prone. With a growing number of influencing factors to consider, it is impossible to manage on spreadsheets without AI automation tools.

Even rule-based pricing doesn’t go far enough: it bases pricing and inventory decisions on historical, backward-looking data, which consistently leads to suboptimal outcomes.

Powerful AI enables forward-looking forecasts, intelligent pricing and inventory recommendations, and task automation to optimize every product in every location and channel, increasing sell-through, reducing inventory and maximizing profits.

"Thanks to Centric’s AI automation tools, the markdowns happen sooner and in smaller increments. This results in a flatter reduction curve and in the end, a better margin in terms of the entire lifecycle of the product."

Create, influence and fulfill demand.

Move away from product shortages and surpluses to balanced inventory levels, higher sell-through and increased margin.

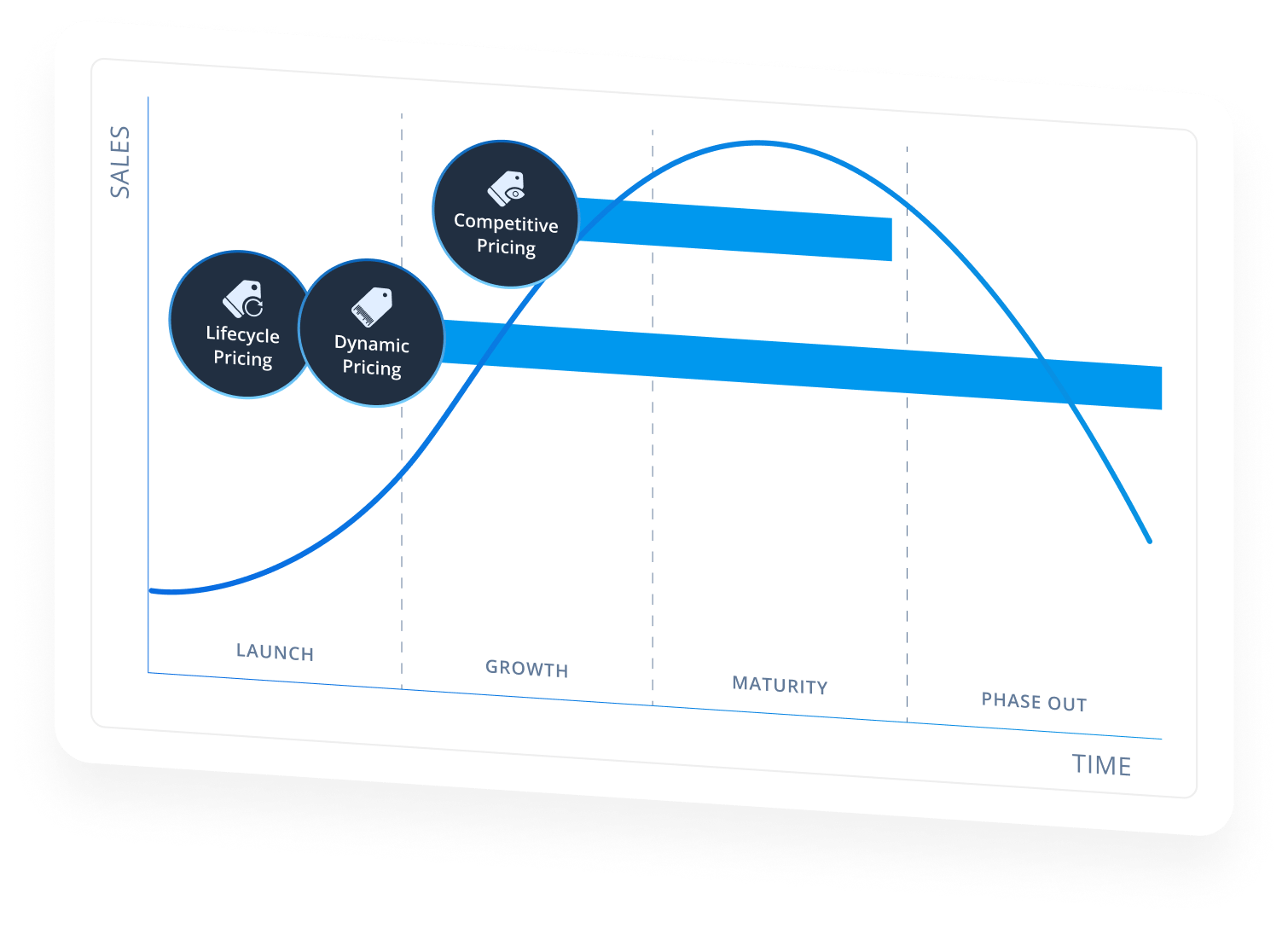

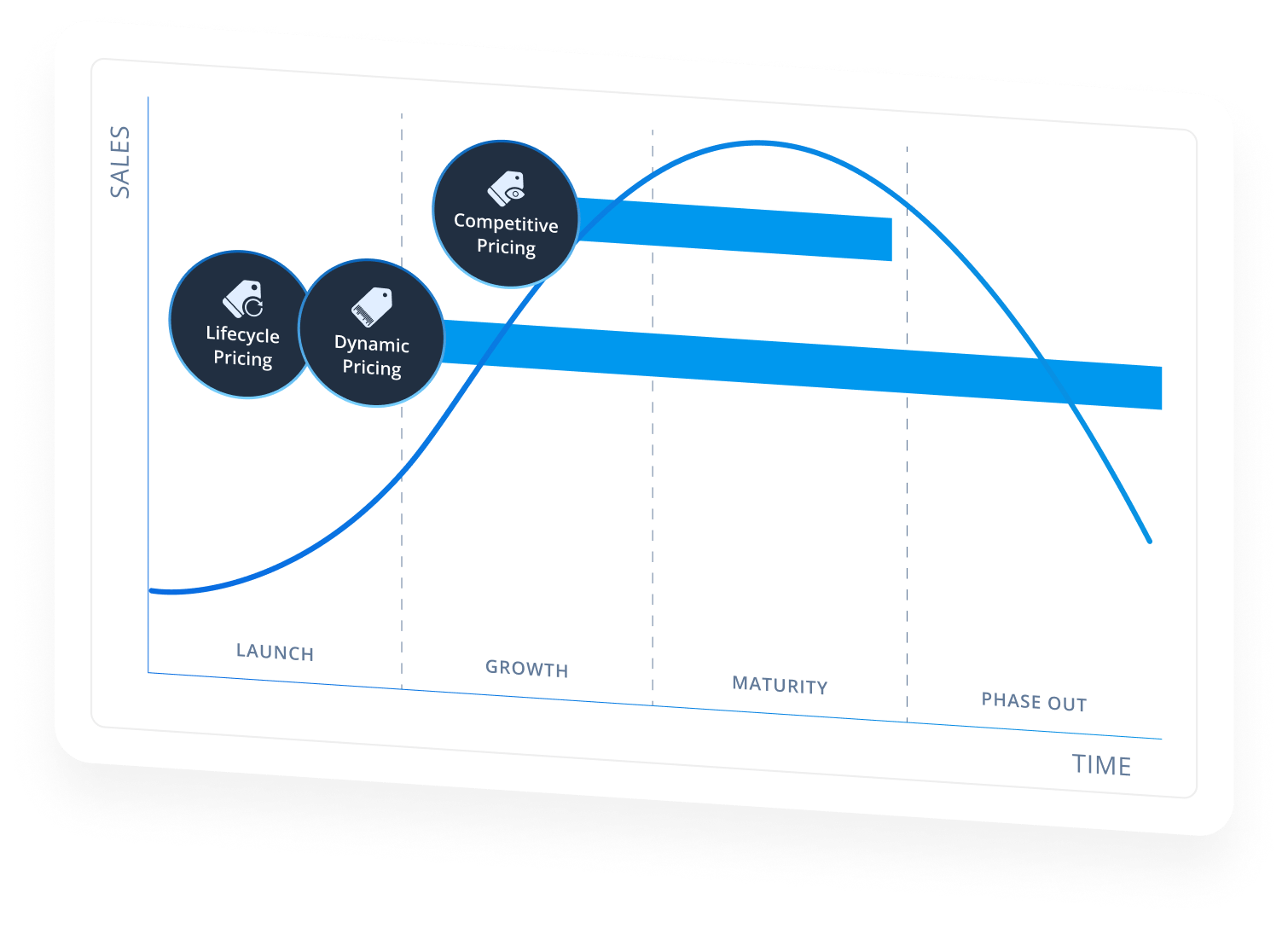

Price Optimization

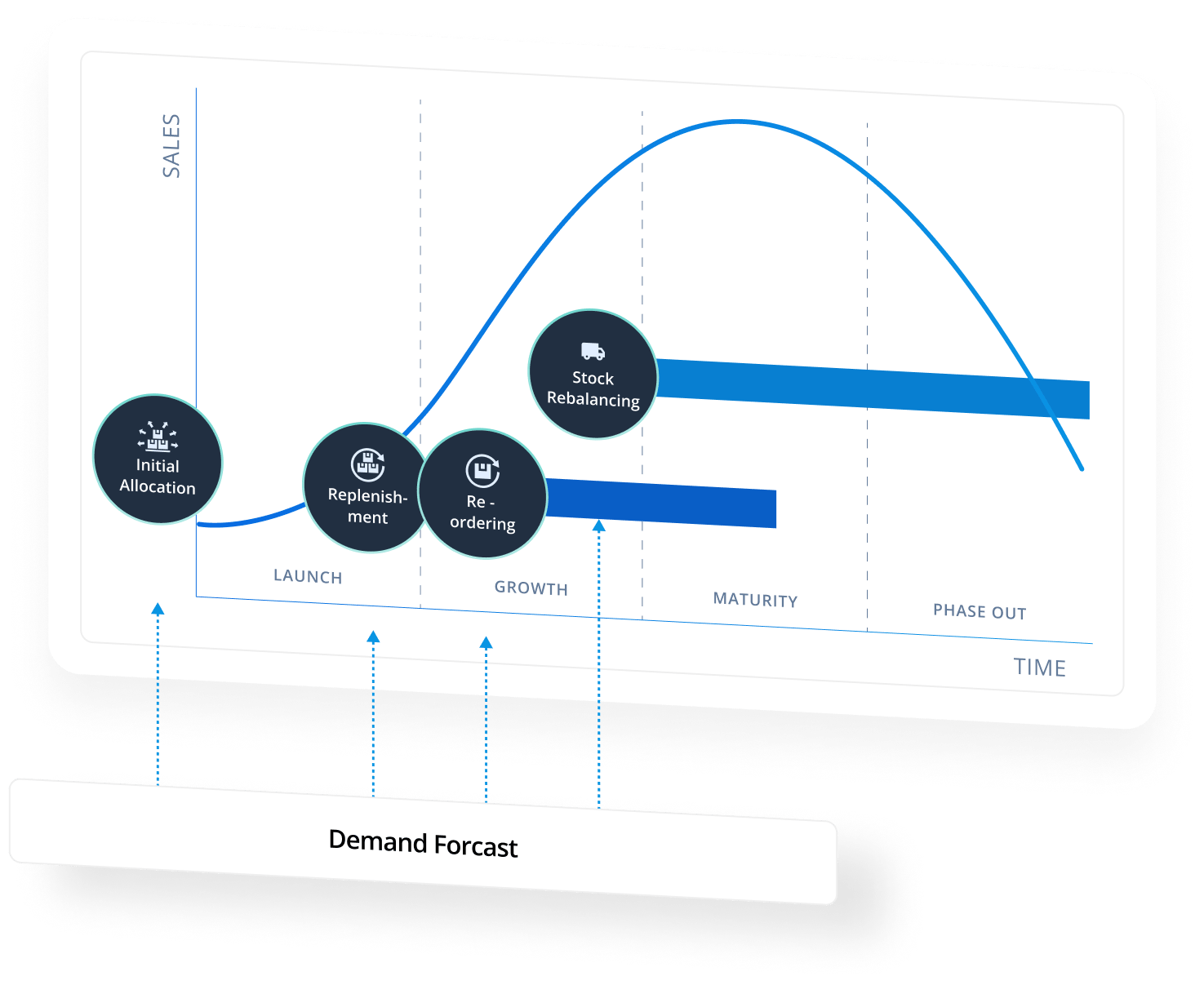

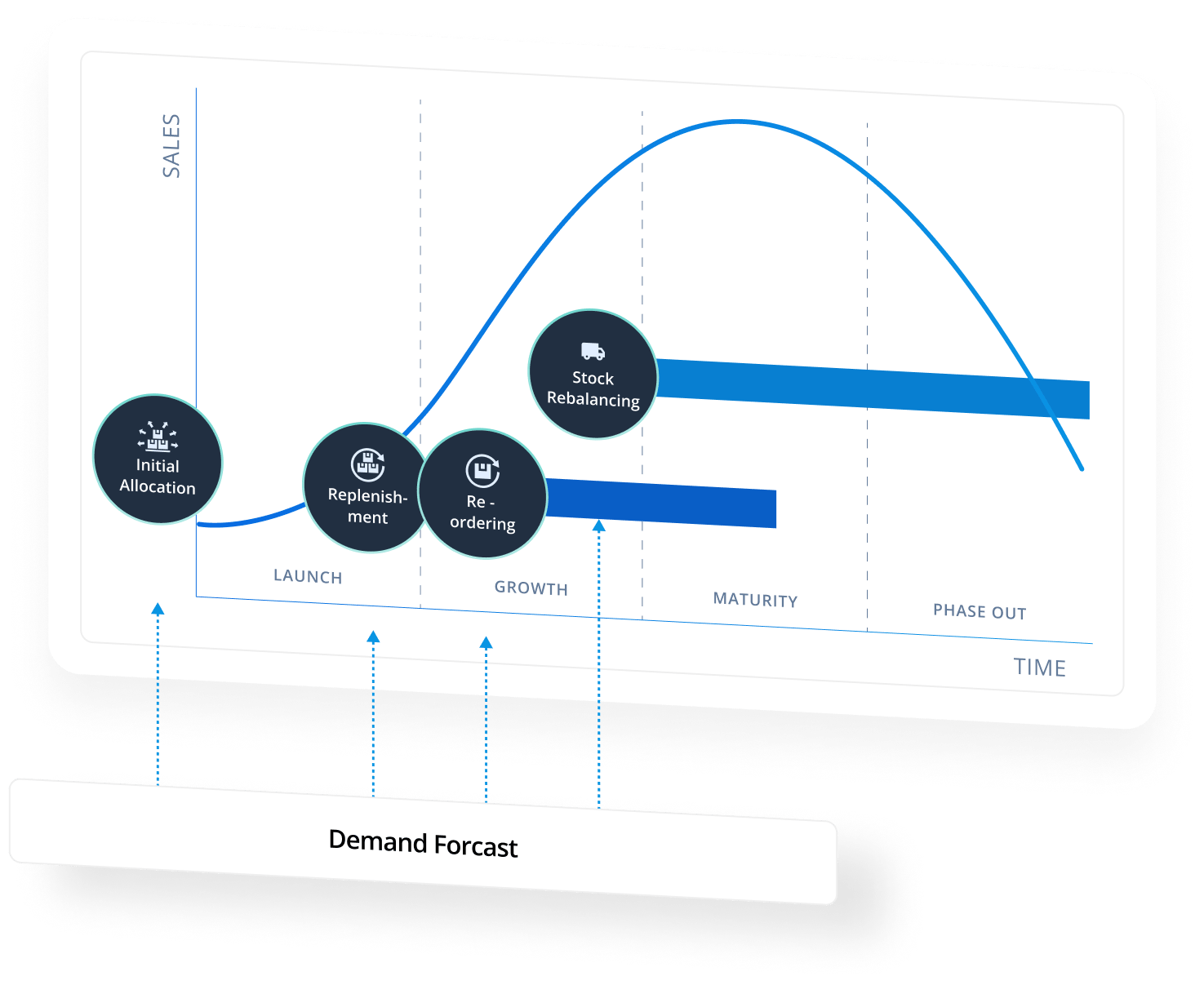

Develop the optimal price for seasonal products from launch all the way through to markdowns.

Quickly adjust e-commerce pricing for infinite-stock items based on market reactions.

Compare pricing against competitors for key items and automate price changes in response to competitor strategies.

Inventory Optimization

Use demand forecasts to automate the initial supply of new and seasonal articles across stores, channels and locations.

Enable demand-driven replenishment to ensure a continuous supply of articles, preventing stockouts while avoiding overpurchasing of inventory.

Balance stock across the entire business, find profitable transfer options and identify the best locations to sell in order to reduce the need for discounting.

Discover how teams increase profitability with our Pricing & Inventory platform.

Request a demo

Want to learn more about Centric Pricing & Inventory?

A flexible and scalable digital foundation for growth

Explore Centric’s AI market-driven solutions

Optimize each step of bringing a product to market, whether in the pre-season, in-season or end-of-season phase. Streamline processes, reduce costs, maximize profitability and drive sustainability.